The rapid spread of “fake news” and online misinformation is a growing threat to the democratic process (Lewandowsky, Ecker, and Cook, 2017; van der Linden et al., 2017a; Iyengar and Massey, 2018), and can have serious consequences for evidence-based decision making on a variety of societal issues, ranging from climate change and vaccinations to international relations (Poland and Spier, 2010; van der Linden, 2017; van der Linden et al., 2017b; Lazer et al., 2018). In some countries, the rapid spread of online misinformation is posing an additional, physical danger, sometimes leading to injury and even death. For example, false kidnapping rumours on WhatsApp have led to mob lynchings in India (BBC News, 2018a; Phartiyal, Patnaik, and Ingram, 2018).

Social media platforms have proven to be a particularly fertile breeding ground for online misinformation. For example, recent estimates suggest that about 47 million Twitter accounts (~15%) are bots (Varol et al., 2017). Some of these bots are used to spread political misinformation, especially during election campaigns. Recent examples of influential misinformation campaigns include the MacronLeaks during the French presidential elections in 2017 (Ferrara, 2017), the PizzaGate controversy during the 2016 U.S. Presidential elections, and rumours circulating in Sweden about the country’s cooperation with NATO (Kragh and Åsberg, 2017).

A broad array of solutions has been proposed, ranging from making digital media literacy part of school curricula (Council of Europe, 2017; Select Committee on Communications, 2017), to the automated verification of rumours using machine learning algorithms (Vosoughi, Mohsenvand, and Roy, 2017), to conducting fact-checks in real time (Bode and Vraga, 2015; Sethi, 2017). However, decades of research on human cognition show that misinformation is not easily corrected. In particular, the continued influence effect of misinformation suggests that corrections are often ineffective, as people continue to rely on debunked falsehoods (Nyhan and Reifler, 2010; Lewandowsky et al., 2012). Importantly, recent scholarship suggests that false news spreads faster and deeper than true information (Vosoughi, Roy, and Aral, 2018). Developing better debunking and fact-checking tools is therefore unlikely to be sufficient to stem the flow of online misinformation (Chan et al., 2017; Lewandowsky, Ecker, and Cook, 2017).

In fact, the difficulties associated with “after-the-fact” approaches to combatting misinformation have prompted some researchers to explore preemptive ways of mitigating the problem (Cook, Lewandowsky, and Ecker, 2017; van der Linden et al., 2017b; Roozenbeek and van der Linden, 2018). The main thrust of this research is to prevent false narratives from taking root in memory in the first place, focusing specifically on the process of preemptive debunking or so-called “prebunking”.

Originally pioneered by McGuire in the 1960s (McGuire and Papageorgis, 1961, 1962; McGuire, 1964; Compton, 2013), inoculation theory draws on a biological metaphor: just as injections containing a weakened dose of a virus can trigger antibodies in the immune system to confer resistance against future infection, the same can be achieved with information by cultivating mental antibodies against misinformation. In other words, by exposing people to a weakened version of a misleading argument, and by preemptively refuting this argument, attitudinal resistance can be conferred against future deception attempts. Meta-analytic research has found that inoculation messages are generally effective at conferring resistance against persuasion attempts (Banas and Rains, 2010).

Importantly, inoculation theory was developed well before the rise of the internet, and research has traditionally focused on protecting “cultural truisms”, or beliefs so widely held that they are seldom questioned (“it’s a good idea to brush your teeth”, McGuire, 1964). In fact, the initial inoculation metaphor was mostly applied to situations in which people had supportive preexisting beliefs and attitudes toward an issue. Only recently have researchers begun to extend inoculation theory to more controversial issues where people are likely to hold vastly different and often polarised belief structures, for example in the context of climate change (van der Linden et al., 2017b), biotechnology (Wood, 2007), and conspiracy theories (Banas and Miller, 2013; Jolley and Douglas, 2017).

Crucially, this line of work finds that inoculation can still be effective even when applied to those individuals who have already been exposed to misinformation (Cook, Lewandowsky, and Ecker, 2017; Jolley and Douglas, 2017; van der Linden et al., 2017b). Conceptually, this approach is analogous to the emerging use of “therapeutic vaccines” administered to those who already have the disease. Therapeutic vaccines can bolster host defenses and still induce antiviral immunity (e.g., in the context of chronic infections and some cancers, see Autran et al., 2004). Similarly, those who already carry an informational “virus” can still benefit from inoculation treatments and become less susceptible to future persuasion and deception attempts. Recent advances in inoculation theory have called for both prophylactic and therapeutic tests of inoculation principles (Compton, 2019), which is especially relevant in the context of fake news and misinformation.

Yet, thus far, scholarship has primarily focused on inoculating study participants against persuasion attempts pertaining to a particular topic, such as climate change (van der Linden et al., 2017b) or 9/11 conspiracies (Banas and Miller, 2013). Although consistent with the initial theory, this approach presents fundamental problems in terms of both the scalability (Bonetto et al., 2018) and generalisability of the “vaccine” across issue domains (Roozenbeek and van der Linden, 2018). For example, recent work indicates that issuing a general warning before exposing participants to misinformation can offer a significant inoculation effect in itself (Bolsen and Druckman, 2015; Cook, Lewandowsky, and Ecker, 2017; van der Linden et al., 2017b). This is consistent with a larger literature on the effectiveness of forewarnings and refutation in correcting misinformation (Ecker, Lewandowsky, and Tang, 2010; Walter and Murphy, 2018).

Importantly, by extending the interpretation of the immunisation metaphor, inoculation could provide a “broad-spectrum vaccine” against misinformation by focusing on the common tactics used in the production of misinformation rather than just the content of a specific persuasion attempt. For example, inoculation messages are known to spill over to related but untreated attitudes, offering a “blanket of protection” (McGuire, 1964; Parker, Rains, and Ivanov, 2016). Moreover, recent research has provided some support for the idea that inoculation can emerge through exposing misleading arguments (Cook, Lewandowsky, and Ecker, 2017). Thus, we hypothesise that by exposing the general techniques that underlie many (political) persuasion attempts, broad-scale attitudinal resistance can be conferred. In considering how resistance is best promoted, it is important to note that prior research has primarily relied on providing passive (reading) rather than active (experiential) inoculations (Banas and Rains, 2010). In other words, participants are typically provided with the refutations of a certain misleading argument. However, as McGuire hypothesised in the 1960s (McGuire and Papageorgis, 1961), active refutation, where participants are prompted to generate pro- and counter-arguments themselves, may be more effective, as internal arguing is a more involved cognitive process. This is relevant because inoculation can affect the structure of associative memory networks, increasing nodes and linkages between nodes (Pfau et al., 2005).

Building on this line of work, we are the first to implement the principle of active inoculation in an entirely novel experiential learning context: the Fake News Game, a “serious” social impact game that was designed to entertain, as well as educate. Previous work has shown that social impact games are capable of prompting behavioural change, for example, in the domain of health (Thompson et al., 2010). We posit that providing cognitive training on a general set of techniques within an interactive and simulated social media environment will help people apply these skills across a range of issue domains.

Accordingly, we developed a novel psychological intervention that aimed to confer cognitive resistance against fake news strategies. The intervention consisted of a freely accessible browser game that takes approximately 15 minutes to complete. The game, called Bad News, was developed in collaboration with the Dutch media platform DROG (DROG, 2018; BBC, 2018b). The game engine is capable of rendering text boxes, images, and Twitter posts to simulate the spread of online news and media. The game is choice-based: players are presented with various options that will affect their pathway throughout the game. Figure 1 shows a screenshot of the game’s landing page.

Fig. 1. The “Bad News Game” intro screen (www.getbadnews.com)

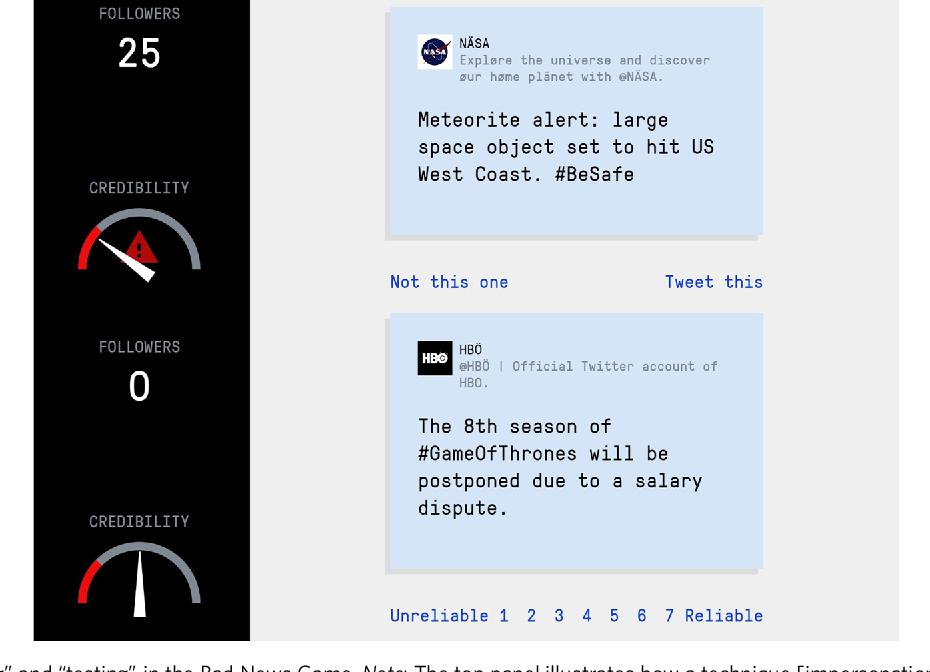

In the game, players take on the role of a fake news creator. The purpose is to attract as many followers as possible while also maximising credibility. The follower and credibility meters, along with a screenshot of the game environment, are shown in Fig. 2.

Fig. 2. Example tweet in the Bad News Game. Note: Follower and credibility meters are shown on the left-hand side

Throughout the game, players gain followers and credibility by going through a number of scenarios, each focusing on one of six strategies commonly used in the spread of misinformation (NATO StratCom, 2017). At the end of each scenario, players earn a specific fake news badge (an overview of the fake news badges is provided in Fig. 3). Players are rewarded for making use of the strategies that they learn in the game, and are punished (in terms of losing credibility or followers) for choosing options in line with ethical journalistic behaviour. They gradually go from being an anonymous social media presence to running a (fictional) fake news empire. Players lose if their credibility drops to 0. The total number of followers at the end of the game counts as their final score.
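The scoring rules described above can be illustrated with a minimal sketch. This is not the game's actual engine: the class and function names (`Choice`, `PlayerState`, `play`), the starting credibility of 50, and all point values are assumptions made purely for illustration. Only the rules themselves (choices change followers and credibility, a badge is earned at the end of each scenario, the player loses when credibility reaches 0, and the final score is the follower count) come from the description.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Choice:
    """One option picked by the player (all point values are hypothetical)."""
    label: str
    follower_delta: int
    credibility_delta: int


@dataclass
class PlayerState:
    followers: int = 0
    credibility: int = 50  # assumed starting value, not taken from the game
    badges: list[str] = field(default_factory=list)

    @property
    def has_lost(self) -> bool:
        # Players lose if their credibility drops to 0.
        return self.credibility <= 0

    @property
    def final_score(self) -> int:
        # The total number of followers counts as the final score.
        return self.followers

    def apply(self, choice: Choice) -> None:
        # Neither meter is allowed to go below zero in this sketch.
        self.followers = max(0, self.followers + choice.follower_delta)
        self.credibility = max(0, self.credibility + choice.credibility_delta)


def play(scenarios: list[tuple[str, list[Choice]]]) -> PlayerState:
    """Run through (badge, picked choices) pairs in order.

    A badge is awarded only when its scenario is completed without
    credibility reaching 0; otherwise the game stops immediately.
    """
    state = PlayerState()
    for badge, picked in scenarios:
        for choice in picked:
            state.apply(choice)
            if state.has_lost:
                return state
        state.badges.append(badge)
    return state


# Hypothetical choices: using a taught strategy is rewarded,
# while ethical journalistic behaviour is punished.
use_tactic = Choice("impersonate a public figure", 100, 5)
act_ethically = Choice("publish a correction", -20, -60)

winner = play([("impersonation", [use_tactic]),
               ("emotion", [use_tactic, use_tactic])])
loser = play([("impersonation", [act_ethically])])
```

In this sketch, `winner` finishes both scenarios with two badges, whereas `loser` is stopped in the first scenario because a single ethical choice exhausts the assumed credibility budget.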

Fig. 3. The six badges that players earn throughout the game after successfully mastering a misinformation technique

There are six badges for players to earn, each reflecting a common misinformation strategy (see Fig. 3). The first badge is called “impersonation” and covers deception in the form of impersonating online accounts. This includes posing as a real person or organisation by mimicking their appearance, for example by using a slightly different username. This technique is commonly used on social media platforms, for example to impersonate celebrities or politicians, or in financial and other online scams (Goga, Venkatadri, and Gummadi, 2015; Jung, 2011; Reznik, 2013).

The second badge covers provocative emotional content: Producing material that deliberately plays into basic emotions such as fear, anger, or empathy, in order to gain attention or frame an issue in a particular way. Research shows that emotional content leads to higher engagement and is more likely to go viral and be remembered by news consumers (Aday, 2010; Gross and D’Ambrosio, 2004; Konijn, 2013; Zollo et al., 2015).

The third badge teaches players about group polarisation: Artificially amplifying existing grievances and tensions between different groups in society, for example political differences, in order to garner support for or antagonism towards partisan viewpoints and policies (Groenendyk, 2018; Iyengar and Krupenkin, 2018; Melki and Pickering, 2014; Prior, 2013).

The fourth badge lets players float their own conspiracy theories: Creating or amplifying alternative explanations for traditional news events which assume that these events are controlled by a small, usually malicious, secret elite group of people (Jolley and Douglas, 2017; Lewandowsky, Gignac, and Oberauer, 2013; van der Linden, 2015).

The fifth badge covers the process of discrediting opponents: Deflecting attention away from accusations of bias by attacking or delegitimising the source of the criticism (Rinnawi, 2007; Lischka, 2017), or by denying accusations of wrongdoing altogether (A’Beckett, 2013).

The last badge educates players about the practice of trolling people online. In its original meaning, the term trolling refers to slowly dragging a lure from the back of a fishing vessel in the hope that the fish will bite. In the context of misinformation, it means deliberately inciting a reaction from a target audience by using bait, making use of a variety of strategies from the earlier badges (Griffiths, 2014; McCosker, 2014; Thacker and Griffiths, 2012).